Here is another example with the original image reduced in size and inset within itself. The original image is from Wikimedia.

-

-

You can fight snapping by holding down the Ctrl key when moving or transforming the layer.

You can also disable snapping entirely: helpx.adobe.com/photoshop/using/positioning-elements-snapping.html

-

Here is an image of mine with an inset at half size and an inset at double size. It's obvious that doubling the size of the image makes everything in it look closer, while halving the size makes everything look further away. This is clear proof that the statement "true perspective depends only on the camera-to-subject distance" is false. Perspective also depends on the image size (for a fixed viewing distance).

The whole point of showing one image as an inset in the other is to make it as easy as possible to think of the two separate images as parts of the same scene. It then becomes obvious that our perception of depth in the image depends on the magnification of that part of the image.

When we look at any 2-D photograph, it is very difficult to make a judgement of the absolute distances of objects from the camera when the photo was taken. Perception of absolute distance depends on the size of the image and our viewing distance from the image. We know this from our own experience and hence effectively "switch off" our usual depth perception because we know it will not work.

However, we can still judge relative distances in the image, as they do not depend on the image size or viewing distance. Combining two images so that they appear to be one scene allows us to make relative judgements of distance between different versions of the same scene, even though we find it very difficult to judge absolute distances in the scene.

Of course, the best comparison is between the photograph and the real scene (as viewed from the original camera position). That is an experiment that cannot be done online. However, it is well worth doing if you can manage it.

Take a photo of a scene which you can visit again. Print the photo at a convenient size and then go back to where the photo was taken and stand in the exact camera position and hold the print up in front of you. Find the distance from your eye (shut the other eye) at which you need to hold the print for it to exactly match the scene behind it. Every object in the photo then appears to be in exactly the same position as the real object behind it.

That gives you the viewing distance for correct perspective.

If the print is held closer to your eye, everything in it looks bigger and closer. If the print is held further away, everything in it looks smaller and further away.
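The print-matching experiment can be sketched numerically. This is a minimal sketch under a simple pinhole model with made-up numbers (a 1.8 m person standing 20 m from the camera who measures 37.5 mm tall on the print); none of these figures come from the thread. It finds the eye-to-print distance at which the person on the print subtends the same angle as the real person, then checks half and double that distance.

```python
import math

def angular_size_deg(size: float, distance: float) -> float:
    """Angle in degrees subtended at the eye by `size`, centred on the line of sight at `distance`."""
    return math.degrees(2 * math.atan(size / (2 * distance)))

def describe(print_angle: float, real_angle: float, tol: float = 1e-9) -> str:
    """Compare the angle subtended by the print with the angle subtended by the real object."""
    if print_angle > real_angle + tol:
        return "looks bigger and closer"
    if print_angle < real_angle - tol:
        return "looks smaller and further away"
    return "matches the scene"

# Hypothetical numbers, all in mm, for illustration only.
person_height_mm = 1_800.0      # real person, 1.8 m tall
person_distance_mm = 20_000.0   # 20 m from the camera position
person_on_print_mm = 37.5       # that person's height as measured on the print

real_angle = angular_size_deg(person_height_mm, person_distance_mm)

# Print and scene match when person_on_print / d == person_height / person_distance.
matching_distance_mm = person_on_print_mm * person_distance_mm / person_height_mm

for d in (matching_distance_mm / 2, matching_distance_mm, matching_distance_mm * 2):
    angle = angular_size_deg(person_on_print_mm, d)
    print(f"eye {d:4.0f} mm from print: {angle:.3f} deg vs {real_angle:.3f} deg, {describe(angle, real_angle)}")
```

The matching distance (about 417 mm with these numbers) plays the role of the viewing distance for correct perspective: at half that distance the person on the print subtends a larger angle than the real person, and at double it a smaller one.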

-

Tom, you have made your point very well with the last example. It is an intriguing exercise. The original itself is a good one to view closer than normal and then further away, trying to remove any preconceived bias from one's mind. It may be counter-intuitive to some, and it was done to death in another thread with examples that weren't so obvious, but that one is pretty clear to me.

-

[deleted]

-

Forget the inserts.

Sit at your normal viewing distance and gaze at the original for a few seconds and make an estimate of the distance between the train and the walker.

Now move as close as you can to your screen, look at it for a bit, and make another estimate.

Now move as far back in your chair as you can, look again for a bit and make a third estimate.

An even simpler method: view the image full screen and make your estimate.

Now view it at 1:1. What is your estimate now?

We need to be able to turn off the rational mind and allow the clues from each viewing distance to prevail.

Q.E.D.

-

[deleted]

-

Oh my, out the window with all scientific endeavour then?

Does your universe revolve around the earth???

-

[deleted]

-

I don't have to be a scientist. Quite the opposite in regard to your comment:

@JACS has written:

If we have to make an effort to see what we do not otherwise see, the "proof" has failed.

If I see a proof and you don't, you expect me to believe it is false???

-

@JACS has written:

Sorry, this is a clear proof that the perspective depends on the clues we get from the frame regardless of the viewpoint. The only reason that inset may look farther away is because it is an insert. Remove the frame around it, and the perception changes.

Indeed; and no matter what size the said frame is or what the viewing distance is, the relative angular sizes of, e.g., the man and the train in the frame do not change, FWIW. That could mean that Adams' angle of convergence does not change with image viewing distance either.

I like angular measure ... what was good enough for Lord Rayleigh et al is good enough for me.

-

[deleted]

-

@xpatUSA has written:

@JACS has written:

Sorry, this is a clear proof that the perspective depends on the clues we get from the frame regardless of the viewpoint. The only reason that inset may look farther away is because it is an insert. Remove the frame around it, and the perception changes.

Indeed; and, no matter what size the said frame is or what the viewing distance is, the relative angular sizes of e.g. the man and the train in the frame do not change, FWIW.

The image is a 2D rendering of a 3D scene. The information from the 3D scene, including angular measure, is retrievable (or may be inferred) if, and only if, the relative viewing distance is the same as the original capture distance.

But the angle that the image subtends at the viewer's eye most certainly does change as the viewer moves closer or further away, which changes the relative angular measure and therefore the perspective.

Relative viewing distance: A ratio of the image size to the viewing distance.

Quoted message:

Which could mean that Adams' angle of convergence does not change with image viewing distance either.

The angle of convergence of the original scene is what it was at the time of capture. But to infer that from a 2D image, we need a similar relative viewing distance.

Quoted message:

I like angular measure ... what was good enough for Lord Rayleigh et al is good enough for me.

I like angular measure too. It preserves perspective (relative distance), all things being equal; "equal" in this case meaning the viewing distance relative to the object distance.

Our brains use cues within the image to infer distances. We know the approximate sizes of the train and the man. I took a photo of a Lace Lizard that my dog had chased up a small tree. These lizards grow to about 2 metres long and maybe 75 to 100 mm in diameter. I was surprised that in the photo it looked about 200 mm thick and heaps longer, more like a Komodo dragon. The tree was no more than 75 mm in diameter but, in the photo, due to perspective distortion, the tree looked bigger and therefore so did the lizard.

-

@JACS has written:

@Bryan has written:

If I see a proof and you don't, you expect me to believe it is false???

Yes because I am much more likely to be right.

Hmmm...

Quoted message:

I make a living writing, reading and teaching proofs.

Which is why, when I need my car fixed, I go to the mechanic, not the one who wrote the manuals.

-

@Bryan has written:

@xpatUSA has written:

@JACS has written:

Sorry, this is a clear proof that the perspective depends on the clues we get from the frame regardless of the viewpoint. The only reason that inset may look farther away is because it is an insert. Remove the frame around it, and the perception changes.

Indeed; and, no matter what size the said frame is or what the viewing distance is, the relative angular sizes of e.g. the man and the train in the frame do not change, FWIW.

The image is a 2D rendering of a 3D scene. The information from the 3D scene, including angular measure, are retrievable (or may be inferred) if, and only if, the relative viewing distance is the same as the original capture distance.

But the angular measure of the viewer to the image most certainly does change as the viewer moves closer or further away, which changes the relative angular measure and therefore the perspective.

I like angular measure too. It preserves perspective (relative distance), all things being equal. Equal in this case being the viewing distance relative to the object distance.

You are missing my point.

Say that at some zoom on my screen the train subtends x rad at my eye and the man y rad ... the ratio of the train to the man is then x/y. If I zoom out by a factor of 2, the train now subtends x/2 rad and the man y/2 rad ... since x/y = (x/2)/(y/2), nothing has changed in the ratio betwixt the train and the man. Similarly, if I zoom in by a factor of n, nx/ny still equals x/y.

Same if I crop and same if I re-sample and same for all reasonable viewing distances.
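The zoom argument can be checked with exact angles rather than the small-angle shorthand. A minimal sketch, assuming hypothetical on-screen sizes (train 60 mm, man 12 mm, eye 600 mm from the screen) and treating each object as if centred on the line of sight; zooming by n is modelled by scaling both on-screen sizes by n.

```python
import math

def subtended_rad(size_mm: float, distance_mm: float) -> float:
    """Exact angle (radians) subtended at the eye by a length centred on the line of sight."""
    return 2 * math.atan(size_mm / (2 * distance_mm))

def train_to_man_ratio(zoom: float, train_mm: float = 60.0, man_mm: float = 12.0,
                       viewing_distance_mm: float = 600.0) -> float:
    """Ratio x/y of the angles subtended by the train and the man after zooming by `zoom`."""
    x = subtended_rad(train_mm * zoom, viewing_distance_mm)
    y = subtended_rad(man_mm * zoom, viewing_distance_mm)
    return x / y

for zoom in (0.5, 1.0, 2.0, 4.0):
    print(f"zoom x{zoom}: x/y = {train_to_man_ratio(zoom):.4f}")
```

With exact arctangents the ratio is not perfectly constant: it stays very close to 5 while the angles are small and drifts slightly lower as zooming makes them large, which fits the "reasonable viewing distances" qualifier. Scaling the viewing distance by 1/n gives the same angles as zooming by n, so the same holds for moving closer or further away.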

[edit]The business of insets is a red herring ...[/edit]

-

[deleted]

-

[deleted]

-

@TomAxford has written:

Quoted message:

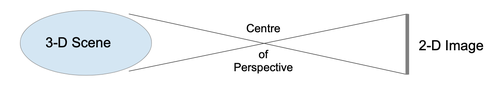

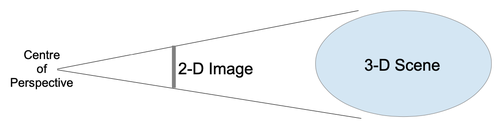

Correct perspective is said to be obtained when a print is viewed in such a way that the apparent relation between objects as to their size, position, etc., is the same as in the original scene. This is achieved when the print is viewed at such a distance that it subtends at the eye the same angle as was subtended by the original scene at the lens. The eye will then be at the centre of perspective of the print, just as, at the moment of taking, the lens was at the centre of perspective of the scene.

... 'The Manual of Photography' by Jacobson et al. (Focal Press, 7th edition, 1978), pages 85-6.

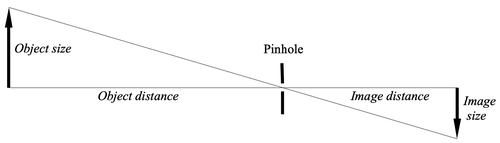

Correct perspective simply means the perspective seen from the camera position. For correct perspective to be seen when viewing a photograph, the viewer's eye must be located at the centre of perspective of the photograph. The distance between the centre of perspective and the photograph is approximately equal to the focal length of the camera lens multiplied by the enlargement factor.

When the photograph is taken:

For a pinhole camera, the pinhole is the centre of perspective.

When the image is viewed in front of the scene itself:

In this diagram, when the image is viewed from the centre of perspective, everything in the image lines up exactly with the scene beyond.

The basic mathematics of perspective is given by the equation:

object size / object distance = image size / image distance
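Rearranging that equation gives the size of each object in the image. A sketch under two stated assumptions: a pinhole camera, and an image distance roughly equal to the focal length (reasonable for distant subjects). The walker and train sizes and distances are hypothetical.

```python
def image_size(object_size: float, object_distance: float, image_distance: float) -> float:
    """Rearranged from: object size / object distance = image size / image distance."""
    return object_size * image_distance / object_distance

# Hypothetical scene, sizes and distances in mm: (object size, object distance).
WALKER = (1_800.0, 20_000.0)    # 1.8 m tall, 20 m from the camera
TRAIN = (4_000.0, 100_000.0)    # 4 m tall, 100 m from the camera

for focal_mm in (24.0, 50.0, 200.0):
    # Assumption: image distance is about equal to the focal length for distant subjects.
    walker = image_size(*WALKER, focal_mm)
    train = image_size(*TRAIN, focal_mm)
    print(f"{focal_mm:5.0f} mm: walker {walker:.2f} mm, train {train:.2f} mm, train/walker {train / walker:.3f}")
```

The train-to-walker ratio in the image comes out the same for every focal length, because focal length scales both image sizes equally; the ratio depends only on the object sizes and the camera-to-object distances.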

Wide-angle perspective distortion is seen when the viewer is further away from the image than the centre of perspective. Telephoto compression is seen when the viewer is closer to the image than the centre of perspective. We are not at all sensitive to small changes in perspective when viewing photographs, so the changes need to be large before the perspective distortion becomes readily noticeable.
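The centre-of-perspective rule and the two kinds of distortion can be combined into a small calculator. It is only a sketch: it uses the approximation "distance ≈ focal length × enlargement factor" stated above, assumes the enlargement is measured across the print width, and the 10% tolerance standing in for "we are not at all sensitive to small changes" is an arbitrary assumption, not a measured threshold.

```python
def centre_of_perspective_mm(focal_mm: float, sensor_width_mm: float, print_width_mm: float) -> float:
    """Approximate eye-to-print distance for correct perspective: focal length x enlargement factor."""
    enlargement = print_width_mm / sensor_width_mm
    return focal_mm * enlargement

def expected_effect(viewing_distance_mm: float, cop_mm: float, tolerance: float = 0.10) -> str:
    """Classify a viewing distance against the centre of perspective.

    `tolerance` is an arbitrary assumption standing in for our insensitivity to small changes.
    """
    ratio = viewing_distance_mm / cop_mm
    if ratio > 1 + tolerance:
        return "wide-angle perspective distortion"   # viewer further away than the centre
    if ratio < 1 - tolerance:
        return "telephoto compression"               # viewer closer than the centre
    return "approximately correct perspective"

# Hypothetical example: 36 mm wide sensor, 300 mm wide print, viewed from 500 mm.
for focal in (24.0, 50.0, 200.0):
    cop = centre_of_perspective_mm(focal, 36.0, 300.0)
    print(f"{focal:5.0f} mm lens: centre of perspective {cop:6.0f} mm -> {expected_effect(500.0, cop)}")
```

With these numbers, the same print viewed from the same 500 mm shows wide-angle distortion for the 24 mm shot and telephoto compression for the 200 mm shot, which is the point of the paragraph above: the effect depends on where the viewer stands relative to the centre of perspective.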

I am confused by the use of the term "center of perspective" in the above, which elsewhere in the world of AI simply means "vanishing point" and:

a) can be anywhere on an image or even outside of it and:

b) there can be more than one vanishing point (often three) associated with a single image.

If only I understood that, the OP might make some sense ...